Two-Step task

This is an implementation of the well-known two-step task [Daw et al., 2011].

The task is often used to investigate the relationship between model-based and model-free reinforcement learning processes in humans.

In this experiment, we use the starting reward probabilities described in [Nussenbaum et al., 2020], but with a separate drift. For their implementation of the task, see their GitHub repo.

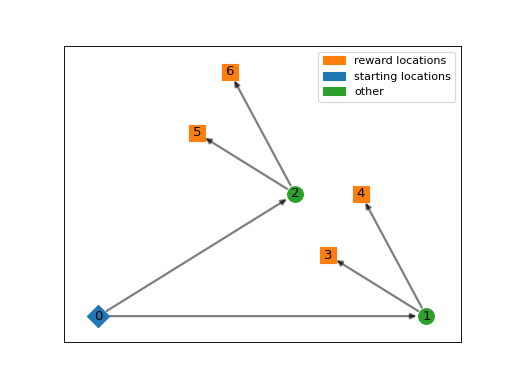

For this project the graph structure is the following:

Or in graph form:


Task description

In this task, participants are asked to make two consecutive decisions.

In the first decision, the participant selects one of two environments by pressing either left or right.

After the selection, the participant enters the second environment, where they again press left or right to choose between two gambles. Each gamble starts with a set reward probability, which then drifts slowly over time.
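The trial structure described above can be sketched in a few lines of Python. This is a minimal illustration, not this project's implementation: the function names `drift` and `run_trial`, the starting probabilities, and the drift parameters (a Gaussian random walk with standard deviation 0.025, reflected at 0.25 and 0.75) are all assumptions chosen for the example. The sketch also assumes that the first choice leads directly to the chosen environment, as in the description above.

```python
import random


def drift(p, sd=0.025, lower=0.25, upper=0.75, rng=random):
    """Advance one reward probability by a single random-walk step.

    The step size and bounds are illustrative assumptions, not values
    taken from this project.
    """
    p = p + rng.gauss(0.0, sd)
    # Reflect the value back into [lower, upper] if the step overshot.
    while p < lower or p > upper:
        if p < lower:
            p = 2 * lower - p
        if p > upper:
            p = 2 * upper - p
    return p


def run_trial(first_choice, second_choice, reward_probs, rng=random):
    """Simulate one trial and return the reward (0 or 1).

    first_choice selects the environment, second_choice selects the gamble
    inside it. reward_probs is updated in place so that every gamble
    drifts after each trial, whether or not it was chosen.
    """
    p = reward_probs[first_choice][second_choice]
    reward = 1 if rng.random() < p else 0
    for env in reward_probs:
        for gamble in reward_probs[env]:
            reward_probs[env][gamble] = drift(reward_probs[env][gamble], rng=rng)
    return reward


# Hypothetical starting probabilities, for illustration only.
probs = {
    "left": {"left": 0.3, "right": 0.7},
    "right": {"left": 0.6, "right": 0.4},
}
rewards = [run_trial("left", "right", probs) for _ in range(10)]
```

Because the probabilities keep moving, a gamble that pays off well early in the session may become the worse option later, which is why participants have to keep tracking outcomes throughout the task.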

Because the task has no experimental conditions, there is only a single starting position, and nothing further can be controlled from the outside.

Nathaniel D. Daw, Samuel J. Gershman, Ben Seymour, Peter Dayan, and Raymond J. Dolan. Model-Based Influences on Humans' Choices and Striatal Prediction Errors. Neuron, 69(6):1204–1215, March 2011. doi:10.1016/j.neuron.2011.02.027.

Kate Nussenbaum, Maximilian Scheuplein, Camille V. Phaneuf, Michael D. Evans, and Catherine A. Hartley. Moving Developmental Research Online: Comparing In-Lab and Web-Based Studies of Model-Based Reinforcement Learning. Collabra: Psychology, 6(1):17213, November 2020. doi:10.1525/collabra.17213.