Go / No-Go

This is a reimplementation of the Go / No-Go task described in [Guitart-Masip et al., 2012].

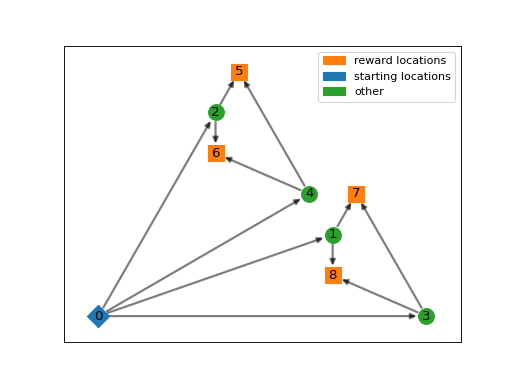

For this project the graph structure is the following:

Or in graph form:


Task description

In this task, participants are asked to respond to a target in time, or to withhold their response.

First, they are presented with a cue that signals the condition and the response type. There are two conditions (reward or punishment) and two response types (go or no-go), so there are four cue types in total.

After the cue, a target stimulus appears, and the participant either presses space in response to it or withholds their response.

Depending on their action, the participant receives a probabilistic reward or a probabilistic punishment.
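The cue and outcome logic described above can be sketched as follows. This is a minimal illustration, not the project's implementation: the cue names, the `sample_outcome` helper, the outcome values (+1, 0, -1) and the default probability `p_good=0.8` are all assumptions made for the example.

```python
import random

# Hypothetical cue table: each cue maps to (condition, required response).
CUES = {
    "go_to_win": ("reward", "go"),
    "go_to_avoid_punishment": ("punishment", "go"),
    "nogo_to_win": ("reward", "no-go"),
    "nogo_to_avoid_punishment": ("punishment", "no-go"),
}


def sample_outcome(cue, action, p_good=0.8, rng=random):
    """Sample the outcome of one trial.

    p_good is an assumed probability of the better outcome after the
    correct action; after the incorrect action it is 1 - p_good.
    Reward cues yield +1 (better) or 0; punishment cues yield 0 (better) or -1.
    """
    condition, required = CUES[cue]
    p_better = p_good if action == required else 1.0 - p_good
    better = rng.random() < p_better
    if condition == "reward":
        return 1 if better else 0
    return 0 if better else -1


if __name__ == "__main__":
    # Correct "go" response on a reward cue: usually +1, sometimes 0.
    print(sample_outcome("go_to_win", "go"))
```

Keeping the cue table separate from the sampling function makes it easy to change the outcome probability without touching the cue definitions.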

As can be seen in the graph, the task is controlled by the agent_location or starting_position, in that each cueing condition has a separate graph structure.
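One way to picture a separate graph per cueing condition is an adjacency mapping in which the starting position selects the sub-graph. The sketch below is only an illustration under assumed conventions: the node numbering, the `build_graph` and `step` helpers, and the action coding (0 = withhold, 1 = press) are hypothetical and are not taken from the project's source.

```python
def build_graph(n_cues=4):
    """Build a hypothetical task graph.

    Nodes 0..n_cues-1 are the cue (starting) positions. Each cue node
    leads to two terminal outcome nodes, indexed by action
    (0 = withhold, 1 = press). Terminal nodes have no successors.
    """
    graph = {}
    next_node = n_cues
    for start in range(n_cues):
        graph[start] = [next_node, next_node + 1]
        graph[next_node] = []
        graph[next_node + 1] = []
        next_node += 2
    return graph


def step(graph, location, action):
    """Return the node reached by taking `action` at `location`."""
    return graph[location][action]


if __name__ == "__main__":
    graph = build_graph()
    starting_position = 2  # selects which cueing condition is played
    print(step(graph, starting_position, 1))
```

Because no two starting positions share successor nodes, choosing the starting position is enough to fix which condition and outcomes apply on a given trial.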

Marc Guitart-Masip, Quentin J.M. Huys, Lluis Fuentemilla, Peter Dayan, Emrah Duzel, and Raymond J. Dolan. Go and no-go learning in reward and punishment: Interactions between affect and effect. NeuroImage, 62(1):154–166, August 2012. doi:10.1016/j.neuroimage.2012.04.024.